In October 2019, Google rolled out BERT, its biggest algorithm update in five years. The update is expected to affect around 10% of search queries, particularly conversational and contextual queries, as well as featured snippets. It should help Google understand natural language better and is likely to be particularly effective for voice search queries.

A month on from the update, there has been a lot of discussion in the SEO community about how it will really affect digital marketers and SEOs. The consensus is that it won’t affect them very much, at least in the short term. In terms of its wider impact on the digital world, however, it’s quite a big deal.

In this article, we’re going to look at the context behind the BERT update to determine just how ‘big’ this change really is.

What is the background to BERT?

BERT is not just a Google algorithm update. It is also a research paper, an open-source research project and a machine learning framework for natural language processing.

In full, BERT stands for ‘Bidirectional Encoder Representations from Transformers’. It is a tool that helps search engines better understand the nuance and context of words in searches.

Google developed this framework and released it as open source, as part of a wider effort across the natural language processing field to understand natural language better.

The framework has been pre-trained on the whole of the English Wikipedia data set, which totals around 2,500 million words. To fine-tune it, it has also been fed detailed question and answer data sets. The main one is MS MARCO, built and open-sourced by Microsoft, which draws on Bing searches. It’s worth noting that other large AI companies are building their own versions of BERT too, such as RoBERTa from Facebook.

For a detailed look at the history of BERT, check out this article from Search Engine Journal.

How can BERT help the future of search?

The main strength of BERT is that it helps Google understand words more thoroughly. Words can be synonymous or ambiguous, and out of context they often mean very little.

We understand the meaning of words through common sense and context, but to Google they don’t really mean anything unless they’re used in a sentence.

BERT helps to build connections between words and to recognise the relationship between words that share similar neighbours. It has the edge on other language models because it uses bi-directional rather than ‘uni-directional’ modelling. Instead of looking at a word only in the context of the words on one side of it, it analyses all the words before and after it.

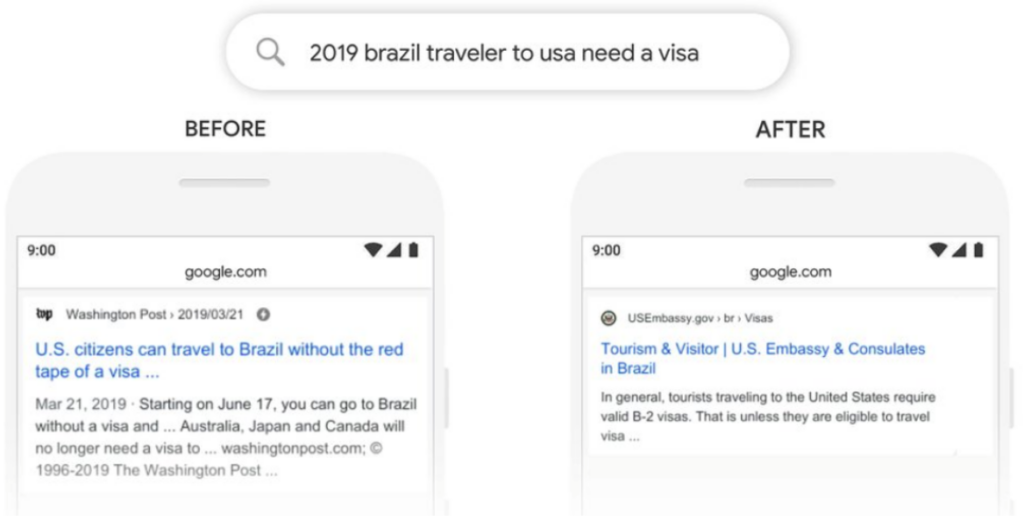

Take this search as an example: “2019 brazil traveller to USA need a visa.”

Pre-BERT, Google would have had difficulty with the word ‘to’ in this context and would have misread the query as being about a US citizen travelling to Brazil. Post-BERT, Google can pick up on this nuance and return the most logical results for the query.

This means it can resolve ambiguous sentences and phrases that are made up of words with many meanings.
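To make the difference between uni-directional and bi-directional context more concrete, here is a minimal Python sketch. It is only an illustration of which words are visible around ‘to’ in the example query, not how BERT itself is implemented; the function names `left_context` and `bidirectional_context` are made up for this example.

```python
def left_context(tokens, index):
    """Words a strictly left-to-right reader has seen before tokens[index]."""
    return tokens[:index]


def bidirectional_context(tokens, index):
    """Words on both sides of tokens[index], excluding the word itself."""
    return tokens[:index] + tokens[index + 1:]


# The example query from above, lower-cased and split on spaces.
query = "2019 brazil traveller to usa need a visa".split()
position = query.index("to")

# Left-to-right only: the destination ("usa") is not visible yet.
print(left_context(query, position))

# Both directions: the words after "to" are visible as well.
print(bidirectional_context(query, position))
```

With only the left-hand words, there is nothing to say where the traveller is going. With both sides in view, ‘brazil traveller’ and ‘usa’ are available together, which is the kind of context needed to interpret ‘to’ correctly.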

How will BERT impact search?

What can we do as SEOs and online marketers to work with it and maintain our strength in search?

BERT will help scale conversational search and should therefore have a major impact on voice search. It could also help with international SEO, because what it learns in one language can be applied to others: many patterns in one language translate into others.

Google will also be able to understand the nuance and context of queries, so the quality of traffic to your site should improve. Your site should appear in the rankings only when it is really relevant and actually answers the search query.

How should you approach SEO post-BERT?

Follow these core principles to help your site’s organic visibility in a post-BERT landscape.

- Write as naturally as possible.

- Avoid over-optimising in an attempt to boost your rankings. With the sophistication that BERT adds, it will be easier for Google to assess whether a page is really relevant to a query or not.

- Be as thorough as possible when you carry out keyword research, if you aren’t already.

- Find keywords in groups of related words and try to use an entire ‘ecosystem’ of keywords on a page where you can.
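As a rough illustration of the last point, the sketch below groups a list of keyword phrases by the terms they share. It is a simple starting point rather than a recommended tool: the phrases, the stop-word list and the function name `group_by_shared_term` are all made up for this example, and real keyword research would draw on proper search data.

```python
from collections import defaultdict

# Very small, illustrative stop-word list (an assumption, not a standard).
STOPWORDS = {"a", "for", "in", "the", "to", "of"}


def group_by_shared_term(phrases):
    """Map each term to the phrases containing it, keeping shared terms only."""
    groups = defaultdict(list)
    for phrase in phrases:
        # dict.fromkeys removes repeated words while keeping their order.
        for word in dict.fromkeys(phrase.lower().split()):
            if word not in STOPWORDS:
                groups[word].append(phrase)
    # A term only forms a group if two or more phrases use it.
    return {term: found for term, found in groups.items() if len(found) > 1}


# Hypothetical keyword phrases, for illustration only.
keywords = ["visa for brazil", "brazil travel visa", "travel insurance brazil"]

for term, related in group_by_shared_term(keywords).items():
    print(term, "->", related)
```

Terms that appear in several phrases point to a group of related keywords that could sit together on one page.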

According to Britney Muller at Moz, it’s important not to over-hype BERT. Instead, focus on the wider context of SEO and what it means for the development of search engines.

While we can’t do a huge amount to optimise for it specifically, we can simply remember to write useful, relevant and reliable content for our users.

Bristol-based SEO experts

At Loom, we have over a decade of experience in SEO and digital marketing. We’d be more than happy to help you develop your digital marketing strategy.

For guidance on how to improve your SEO strategy following the BERT update, get in touch with our marketing experts today, or call us on 0117 923 2021.